Ask any pilot,

Don't care about the gravel,

Just need the runway

Since it doesn't seem to be that well documented online, I thought I'd document my recent work in trying to get the Leap Motion working as a cheap, short-range depth sensor. I remember being really excited about the sensor when it was first introduced back in 2012, especially since my research was on manipulation, and our lab was just getting our Kinect up and running. The Leap was sensationalized (in more than one blog/publication) as a next-generation, hand-held Kinect with greater accuracy and at about 1/3 the cost. I was personally really hyped because I had read that one of the founders had a PhD in fluid flow, and I had hoped that he had figured out some way of utilizing particle motion to improve depth sensing. And....

What the Leap Isn't

....Not quite. Sometimes things that appear to be too good to be true are. It's definitely not an RGB-D sensor like the Kinect. It doesn't project a grid of points like the Kinect, nor does it do any time-of-flight-based depth detection. Given that reliable depth sensors other than the Kinect run for $300-1000 to this day, I guess that shouldn't be too surprising.

Initial impressions of the device were pretty underwhelming, and it introduced me to the notion of the gorilla arm effect. Out of the box, it was pretty glitchy with the original SDK, and it couldn't track anything other than hand motions. Not only that, but the hand tracking wasn't particularly good, and there'd be noticeable lag in performance. It's definitely much improved with the most recent SDK update, but to date I haven't come across anyone or any article still touting the device as the next great interface.

What the Leap Is

That said, after I dug into the hardware and played around with its developer API a little bit more, I have to say that it's still a pretty innovative little bugger, and perhaps there's more to it than people give it credit for, even if it won't ever replace a touchscreen or a mouse (and it won't, not even close).

The Leap is essentially two webcams with fish-eye lenses, three infrared LEDs, and a plastic diffusing panel. This combination is actually pretty neat. Granted, the following analysis (if you can call it that) is mostly quick postulation: two webcams are needed to perform stereo vision in order to extract depth information, and they both need to be fish-eye in order to cover the full hand workspace at close range, as per the Leap Motion's use case. The infrared lighting in combination with the diffuser helps ensure that only items within about a 2 ft range are detected, and it also seems to remove color as a potential problem.

IR means (presumably) that we don't have to worry as much about skin tone or color-based occlusion (i.e. hand in front of bare skin or skin-colored clothing), but reflective surfaces become more problematic, and the Leap goes as far as to include a brightness calibration routine to help minimize that issue. This (probably) also means that the sensor's not so great (if at all functional) in daylight, but given that it's designed for office use and meant for hand tracking (and not hand displaying), using low-tier tech to keep costs down was a pretty smart design decision. It may not be good for continued interface use, but I actually think it'd be pretty ideal for intermittent browsing or video playback. It might actually make the most sense as an augmented and complementary input option, especially if the arrangement with Asus actually works out, though there hasn't been news on this front for more than a year. A lot of the consumer complaints would be moot if developers actively moved away from trying to make "clicks" work via positioning. I don't see anything wrong with broader gestures (i.e. use a repeat swipe motion or spread-hand configuration as a replacement for clicking via a fingertip motion).

Given its limitations, what's it good for aside from its advertised use cases?

What I Managed to Finagle out of the Leap

As a pseudo-hardware-hacker (or hack =P) not particularly interested in hand-tracking, the Leap wasn't really useful to me until the latest developer SDK exposed the image interface, allowing us to extract the raw images. Given its size, I was recently thinking that it could be useful as part of a camera-in-hand configuration with our lab's manipulation setup. I currently have a closed-loop system where a Kinect mounted above our setup detects objects on a table and tells our robotic arm where to move to try and grasp objects. We specialize in hand design, so our robotic hands have the unique aspect of passively accommodating sensing/positioning errors, but occlusion and sensing errors due to the mounted location of the Kinect still pose problems from time to time. It also means that I have to move the arm and hand out of the way of the workspace to perform each table scan, and it's almost impossible to reliably detect anything smaller than a computer mouse. Having a camera in-hand would mean that I could scan as I move, and the limited range of the Leap actually helps me a great deal, as I don't really have to segment items in the scene until they're actually physically close and in the path of the arm. In the best case scenario, the Leap could serve as a really shitty LIDAR that fits in the palm of the robotic hand.

A couple of people had begun work on getting useful disparity images from the Leap (1,2), and there's plenty of existing documentation on stereo vision processing with OpenCV (particularly this guy), so luckily I didn't have to start from scratch, but at the end of the day (or week, in this case), my results were a tad underwhelming:

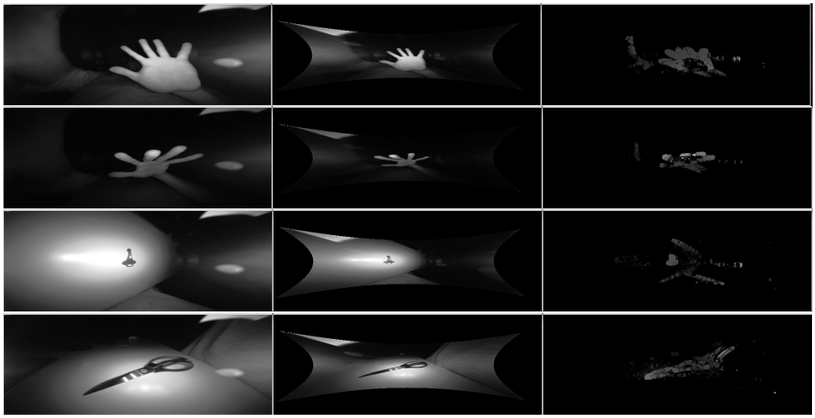

From left to right, there's the raw left image, the rectified left image, and the resulting disparity image (the right image isn't shown). As you can see, the Leap works a lot better if it doesn't have to deal with a reflective background surface, but it still does a passable job of picking out the objects in the field of view, which is nice. For my application, objects don't really show up until they're just slightly outside of "grasping range" for our lab's robotic hands, which is a bigger plus. However, I'm struggling with the glare from the IR LEDs and the poor resolution of the cameras (each is 640x240). I managed to reduce the glare (especially in the middle of the viewport) by taping over the LEDs on the outside of the diffuser. Without tape, the middle of each image was essentially a bright spot, which left nothing there distinguishable when generating the disparity image. There's also a problem with the auto-lighting adjustment from the Leap, as the contrast seems to adjust itself every 10 seconds or so. It doesn't seem like I have the option to adjust that in the current API.
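On why the low resolution hurts: for a rectified pinhole stereo pair, range follows from disparity as Z = f * B / d, so each one-pixel step in disparity covers a bigger chunk of range the farther away the object is. Here's a minimal sketch of that relationship. The focal length and baseline below are made-up placeholder numbers, not measured Leap parameters, and the function name is just for illustration.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_mm):
    """Range in mm for a rectified pinhole stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return None  # zero or negative disparity carries no usable range
    return focal_px * baseline_mm / disparity_px


# Hypothetical values for illustration only (NOT the Leap's real calibration).
FOCAL_PX = 100.0
BASELINE_MM = 40.0

for d in (4, 5, 16, 17):
    z = disparity_to_depth(d, FOCAL_PX, BASELINE_MM)
    print("disparity %2d px -> %.1f mm" % (d, z))

# Range covered by a single one-pixel disparity step, far away vs. close up.
far_step = (disparity_to_depth(4, FOCAL_PX, BASELINE_MM)
            - disparity_to_depth(5, FOCAL_PX, BASELINE_MM))
near_step = (disparity_to_depth(16, FOCAL_PX, BASELINE_MM)
             - disparity_to_depth(17, FOCAL_PX, BASELINE_MM))
print("one-pixel step far: %.1f mm, near: %.1f mm" % (far_step, near_step))
```

With fewer pixels per scanline there are fewer distinct disparity values to go around, so the range estimate gets coarse quickly outside of close range.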

Nitty Gritty

[code]

There are three main steps to generating the disparity map:

- Extract the images from the Leap

- Undistort the Leap images

- Run a stereo vision algorithm between a pair of images

I resorted to using ctypes to convert the Leap raw image into a useful structure in Python at a framerate suitable for real-time imaging. Others seem to have had issues with this approach, but it worked fine for me, although it would indeed occasionally show a few corrupted rows. The odd thing is that when I compared the output array from the ctypes approach to one I copied pixel-by-pixel (which always displays uncorrupted), they were always equivalent. Frustrating. But bearable.
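To give a feel for the ctypes route, here's a minimal sketch that uses only the standard library. It does not touch the Leap SDK at all: the "frame" is a stand-in ctypes buffer I fill myself, and the function names are hypothetical. The assumption is that the SDK hands you an integer address for a width x height block of 8-bit pixels, which you'd use in place of `ctypes.addressof(frame)`.

```python
import ctypes

WIDTH, HEIGHT = 640, 240  # per-camera resolution quoted in this post


def make_fake_frame():
    """Stand-in for the SDK's image buffer: a ctypes byte array we own."""
    buf = (ctypes.c_ubyte * (WIDTH * HEIGHT))()
    for i in range(WIDTH * HEIGHT):
        buf[i] = (i * 7) % 256
    return buf


def copy_bulk(address, width, height):
    """Copy the whole frame in one call, given only the buffer's address."""
    return ctypes.string_at(address, width * height)


def copy_per_pixel(buf, width, height):
    """Slow reference path: read every pixel individually."""
    return bytes(bytearray(buf[i] for i in range(width * height)))


frame = make_fake_frame()
address = ctypes.addressof(frame)  # with real hardware, the SDK supplies this

fast = copy_bulk(address, WIDTH, HEIGHT)
slow = copy_per_pixel(frame, WIDTH, HEIGHT)
print("bulk copy matches per-pixel copy:", fast == slow)

# Split the flat byte string into rows for display or further processing.
rows = [fast[r * WIDTH:(r + 1) * WIDTH] for r in range(HEIGHT)]
```

Note that `ctypes.string_at` makes a copy, whereas building an array type with `from_address` gives a view onto memory that something else may still be writing to. If you have NumPy around, the copied bytes can be wrapped with `numpy.frombuffer` and reshaped to (HEIGHT, WIDTH).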

For undistortion, I had initially run both raw images through the default OpenCV checkerboard calibration routine (1, 2), but the result was blocky and still visibly distorted (it was also rotated and flipped for some odd reason). I ended up using the included warp() functions from the Leap API. It turns out (from my limited experimenting with the function) that it returns the same value regardless of image content (which you would expect), so I create or load the appropriate undistortion mapping at initialization and then use OpenCV's remap function to transform the images quickly. I have no guarantee regarding the accuracy of the undistortion, but it looks like the example given in the API documentation, so I went with it.
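The "build the mapping once, reuse it every frame" idea looks roughly like the sketch below. This is a pure-Python, nearest-neighbour stand-in rather than my actual code: `toy_warp` is a made-up radial function standing in for the Leap's warp call (whose real signature I'm not reproducing here), and `remap_nearest` is a slow illustration of what OpenCV's remap does with proper interpolation.

```python
def toy_warp(x, y, width, height, k=0.5):
    """Hypothetical warp: for an output pixel, return the source (x, y) to sample.

    Pulls sample points toward the image centre, more strongly near the edges.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    nx, ny = (x - cx) / cx, (y - cy) / cy
    scale = 1.0 / (1.0 + k * (nx * nx + ny * ny))
    return cx + nx * cx * scale, cy + ny * cy * scale


def build_maps(width, height, warp):
    """Evaluate the warp once per output pixel and store the lookup tables."""
    map_x, map_y = [], []
    for y in range(height):
        row_x, row_y = [], []
        for x in range(width):
            sx, sy = warp(x, y, width, height)
            row_x.append(sx)
            row_y.append(sy)
        map_x.append(row_x)
        map_y.append(row_y)
    return map_x, map_y


def remap_nearest(image, map_x, map_y, fill=0):
    """Apply precomputed maps with nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    out = []
    for row_x, row_y in zip(map_x, map_y):
        out_row = []
        for sx, sy in zip(row_x, row_y):
            ix, iy = int(round(sx)), int(round(sy))
            inside = 0 <= ix < width and 0 <= iy < height
            out_row.append(image[iy][ix] if inside else fill)
        out.append(out_row)
    return out


WIDTH, HEIGHT = 9, 5
MAP_X, MAP_Y = build_maps(WIDTH, HEIGHT, toy_warp)  # once, at initialization

# Per frame: only the cheap table lookup runs, never the warp itself.
frame = [[y * 10 + x for x in range(WIDTH)] for y in range(HEIGHT)]
undistorted = remap_nearest(frame, MAP_X, MAP_Y)
```

With OpenCV, the two tables would be stored as float32 arrays and handed to `cv2.remap` together with an interpolation flag, which is far faster than looping in Python.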

As for the final disparity image, I don't have a great handle on the stereo vision parameters yet, but the above was about as clean as I could get it. For my purposes, it helped to turn the texture threshold way up, but otherwise I used mostly standard/default stereo parameters. I'm thinking that the resolution limitation is my biggest constraint right now, especially since the remapping essentially down-samples a good portion of the image (I think). Running the stereo vision algorithms on the distorted raw image might produce inaccurate but less noisy results, so that may be an alternative for future work.
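To show what a texture threshold buys you, here's a toy block matcher in plain Python. It is a simplified sketch, not OpenCV's implementation: it picks the disparity with the lowest sum of absolute differences over a small window, and skips any window whose summed horizontal gradient falls below the threshold. The function names, the texture measure, and the synthetic images are all my own assumptions for illustration.

```python
def sad(left, right, x, y, d, half):
    """Sum of absolute differences between a left window and a shifted right window."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return total


def texture(left, x, y, half):
    """Simplified texture measure: summed absolute horizontal gradient in the window."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half):
            total += abs(left[y + dy][x + dx + 1] - left[y + dy][x + dx])
    return total


def block_match(left, right, max_disp, half=1, texture_threshold=0, invalid=-1):
    """Per-pixel disparity by exhaustive search; low-texture windows stay invalid."""
    height, width = len(left), len(left[0])
    disp = [[invalid] * width for _ in range(height)]
    for y in range(half, height - half):
        for x in range(half + max_disp, width - half):
            if texture(left, x, y, half) < texture_threshold:
                continue  # too flat to match reliably
            costs = [sad(left, right, x, y, d, half) for d in range(max_disp + 1)]
            disp[y][x] = costs.index(min(costs))
    return disp


# Synthetic pair: a textured strip on a flat background, shifted by 2 pixels.
PATTERN = [10, 200, 50, 120, 30, 250, 90, 170]
W, H, SHIFT = 20, 5, 2
right_row = [0] * W
for i, value in enumerate(PATTERN):
    right_row[6 + i] = value
left_row = [0] * SHIFT + right_row[:W - SHIFT]
right_img = [list(right_row) for _ in range(H)]
left_img = [list(left_row) for _ in range(H)]

disp_raw = block_match(left_img, right_img, max_disp=4, texture_threshold=0)
disp_filtered = block_match(left_img, right_img, max_disp=4, texture_threshold=100)
```

On the textured strip both runs recover the 2-pixel shift. On the flat background the unfiltered run still reports a disparity, even though there's nothing there to match on, while the thresholded run leaves it marked invalid. That's roughly the behaviour I was after when cranking the threshold up.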